About Me

I'm a passionate applied scientist with a PhD from UT Austin (it's my great honor to have been supervised by Prof. Atlas Wang) and a Bachelor's from BUPT. Currently at Amazon Search, I apply my expertise in multi-modality LLM fine-tuning, large-scale ranker training, and recommendation system optimization to enhance customer experience. My work involves recommending relevant keywords to customers at a global scale, improving the keyword suggestion acceptance rate and streamlining the overall experience for Amazon shoppers.

Interests

- Search Experience Optimization

- Large Language Models

- Graph Neural Networks

Education

- The University of Texas at Austin

  M.S. in Engineering, 2018 - 2020

  Ph.D., 2020 - 2023

- Beijing University of Posts and Telecommunications

  B.Eng. in Communications Engineering, 2014 - 2018

Professional Experience

- Applied Scientist, Amazon, 2023 - present

- Developed and implemented large-scale keyword recommendation systems for Amazon Search, improving the search suggestion acceptance rate and purchase sales for a global audience.

- Utilized multi-modality LLM fine-tuning and large-scale ranker training to optimize candidate generation and ranking for Amazon's recommendation systems.

- Skilled in building and deploying recommendation systems that improve next-search keyword prediction.

- Research Intern, The Home Depot, summer 2023

- Provide essential accessory recommendations on the Home Depot site, using content-based recommendation as a substitute for user-behavior-based recommendation.

- Leverage ChatGPT and other large language models to retrieve the product taxonomy used for recommendation. Compare recommendation recall across extractive QA, text classification, and ChatGPT.

- Host(s): Dr. Omar ElTayeby, Dr. Haozheng Tian, Dr. Janani Balaji, Dr. Xiquan Cui

- Research Intern, Google Cloud, summer 2022

- Performance prediction, workload and platform matching for cloud computing.

- Host(s): Dr. Eric Zhang, Dr. Chelsea Llull

- Applied Science Research Intern, Amazon A9, summer 2021 - spring 2022

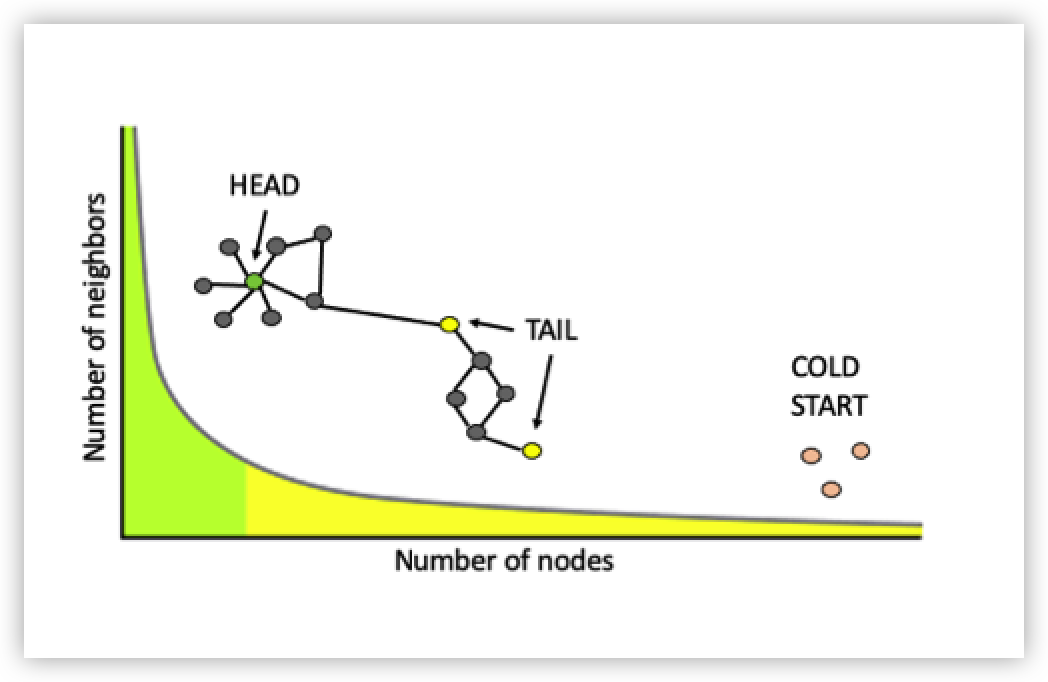

- Cold-start graph embedding learning for recommendation systems: first pretrain a graph model to generate versatile node embeddings using self-supervised learning, then learn a student model that is able to generalize to strict-cold-start nodes.

- Manager/Mentor(s): Dr. Karthik Subbian, Dr. Nikhil Rao

- Research Assistant, Visual Informatics Group, the University of Texas at Austin

- Advisor: Dr. Zhangyang (Atlas) Wang

- Research topics include:

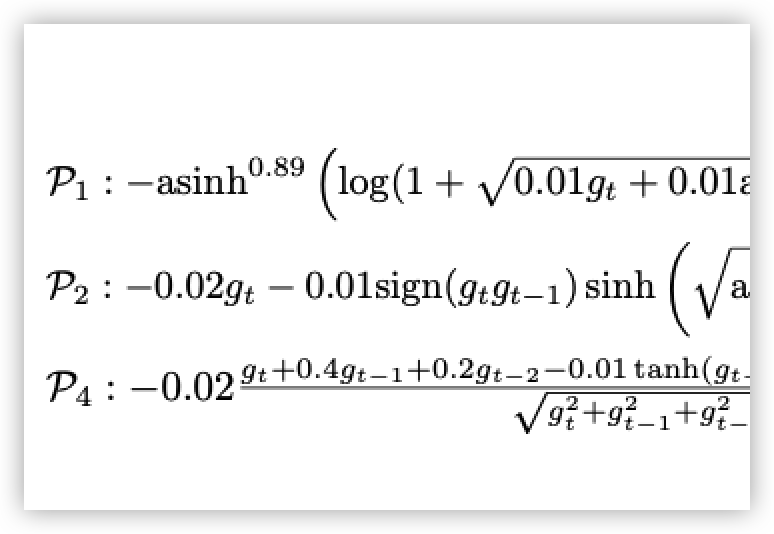

- Learning to Optimize: deliver faster and better optimizers for deep neural networks by backpropagating through the optimization procedure and finding the optimal optimizer in a data-driven way.

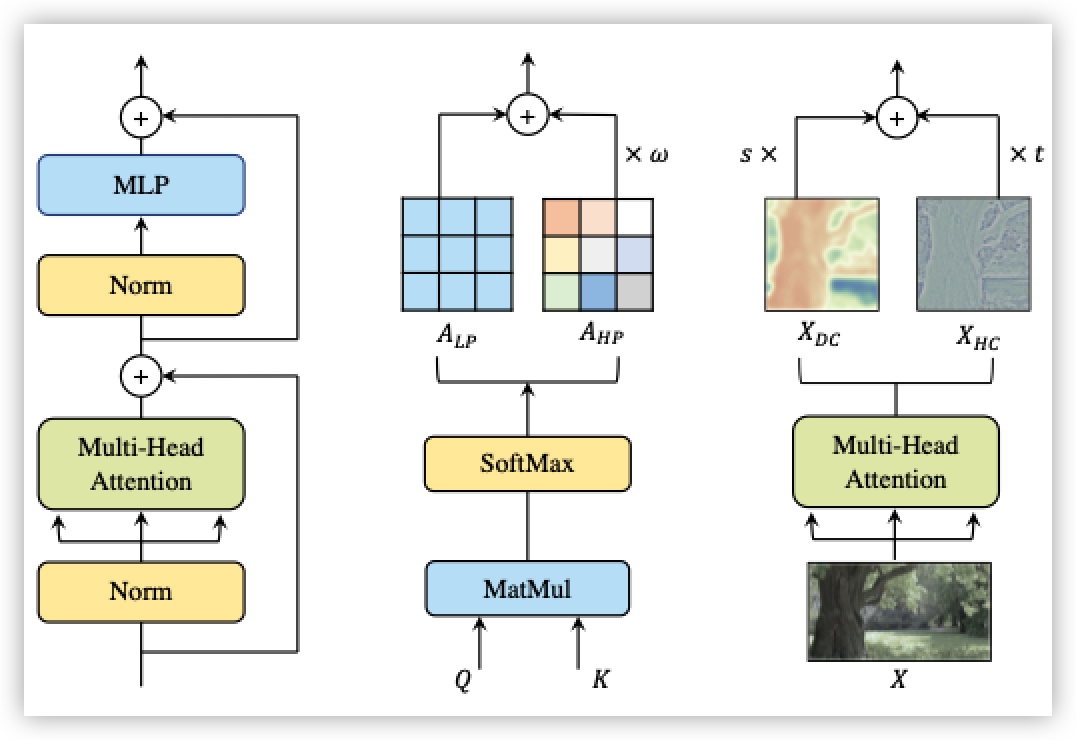

- Transformers: leverage the sequence modeling power of transformers in graph modeling/reinforcement learning.

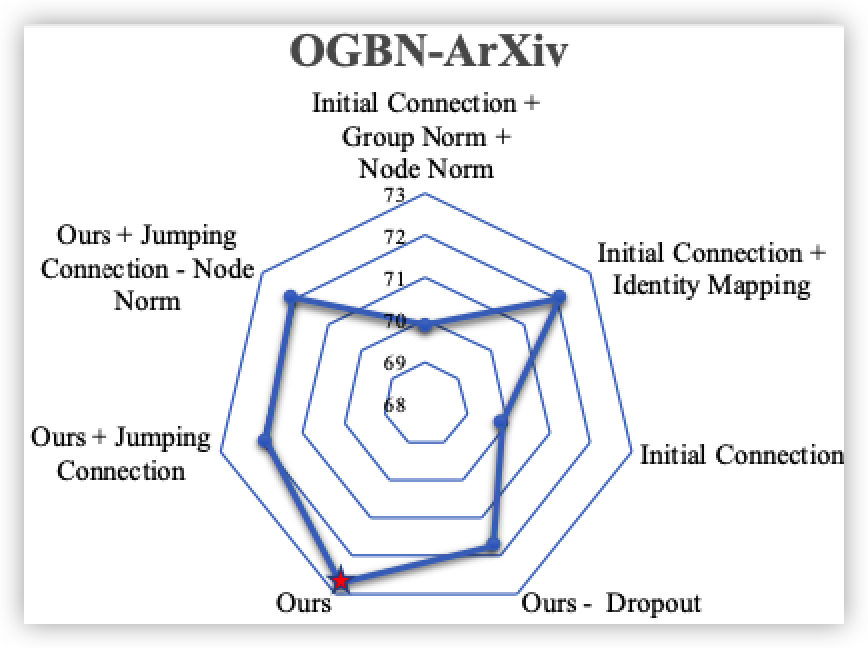

- Graph Networks: develop efficient graph models that scale and generalize.

- Research Assistant, WSIL Group, the University of Texas at Austin

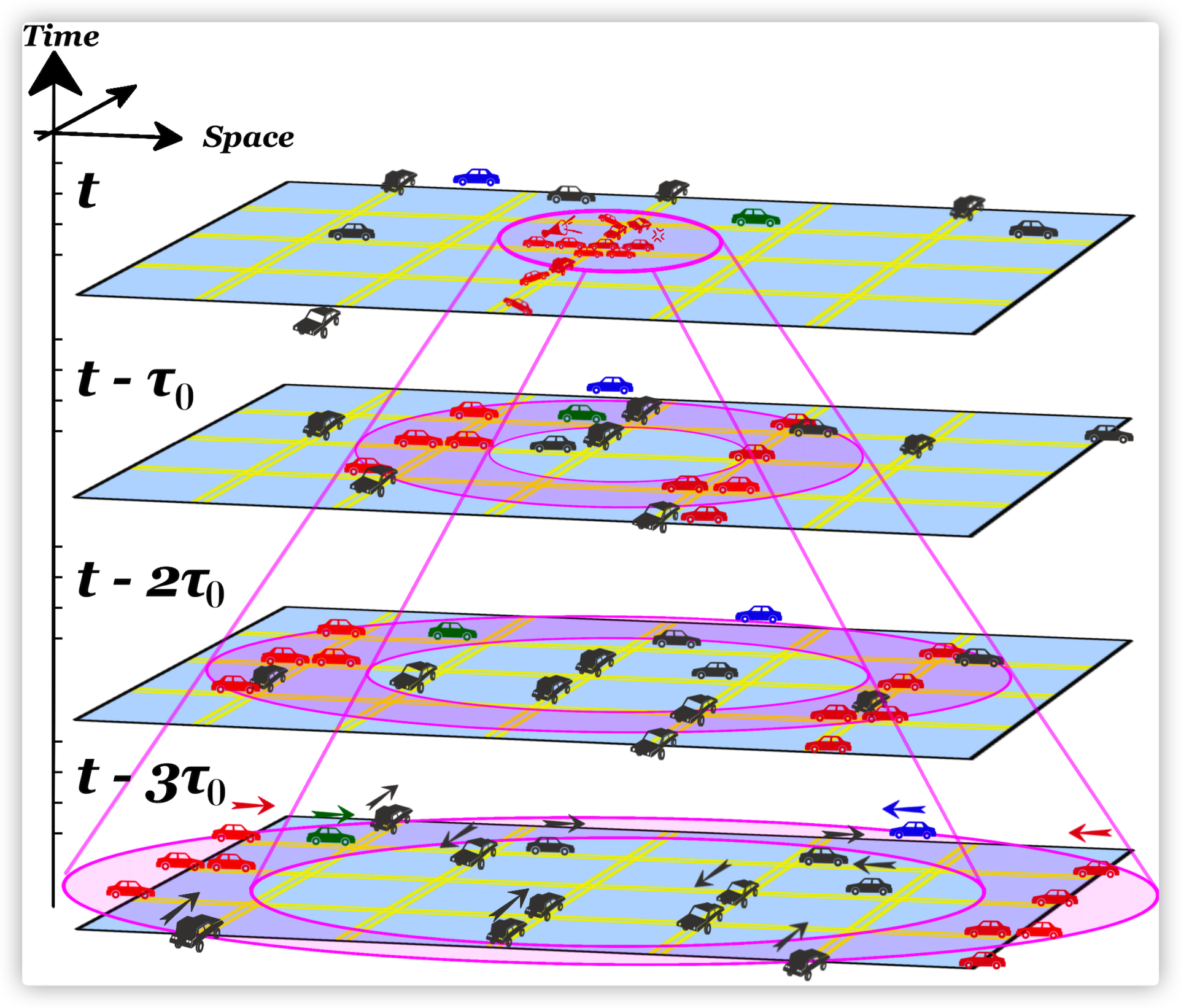

- Estimate user device position from 5G millimeter-wave signals, using compressive sensing (Orthogonal Matching Pursuit) and deep learning (ConvNets, swarm-based learning to optimize) approaches.

- Advisor: Dr. Nuria González Prelcic

- Research Intern, GEIRI North America

- Train a Soft Actor-Critic agent to manage a large-scale power grid: embed the huge discrete geometric actions into a continuous space, use Graph Neural Networks for preprocessing, and use Monte-Carlo Tree Search for efficient exploration.

- Mentor: Dr. Jiajun Duan

- Research Assistant, UWC Lab, Beijing University of Posts and Telecommunications

- Signal processing for wireless communications (Doppler spread estimation)

- Advisor: Dr. Wenjun Xu

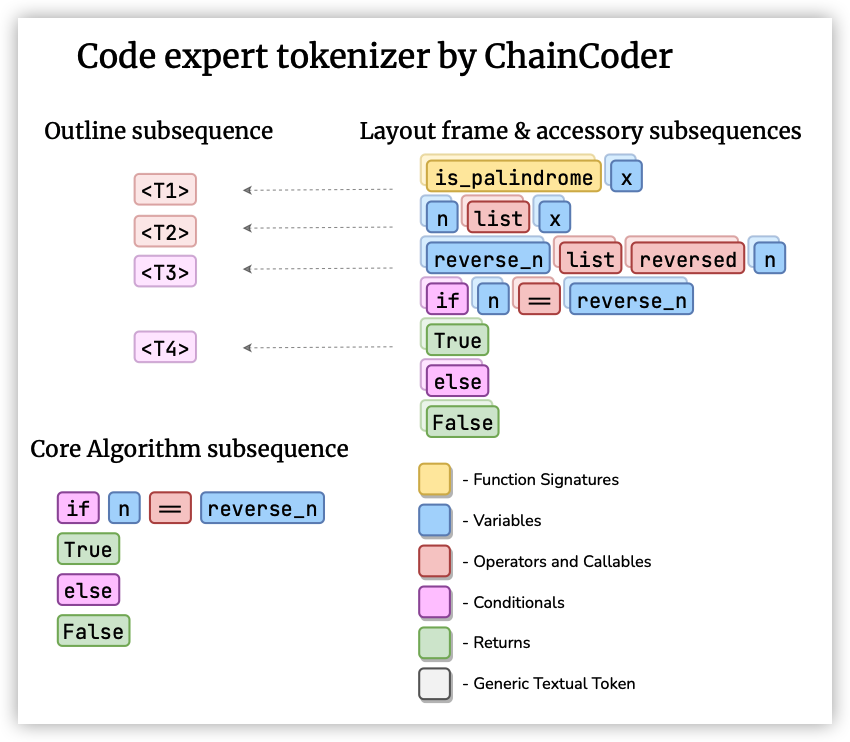

Selected publications

Contact

Related Links

- Visual Informatics Group (VITA) @ the University of Texas at Austin

- The University of Texas at Austin